Efficiently Running Meta-Llama-3 on Mac Silicon (M1, M2, M3)

Link to Jupyter Notebook: GitHub page

Training LLMs locally on Apple silicon: GitHub page.

Introduction

Meta-Llama-3 is a capable general-purpose language model, and the MLX framework, which is optimized specifically for Apple silicon, lets it run efficiently on a Mac. This tutorial guides you through loading and running Meta-Llama-3 with MLX. The same approach can be used to try other models available in MLX format, such as OpenELM, Gemma, and Mistral.

Getting Started

To run Meta-Llama-3 locally, you need a Mac with Apple silicon (an M1, M2, or M3 chip). Set up a Python virtual environment, then run the following commands in your terminal to install the required packages (in a Jupyter Notebook cell, use %pip install in place of pip install):

pip install --upgrade ipywidgets

pip install torch

pip install mlx-lm

These commands install the tools needed to run the Meta-Llama-3 model as well as other LLMs such as Gemma.
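Before going further, it can be worth confirming that Python is actually running natively on Apple silicon. The following is a minimal sketch using only the standard library; the helper name is_apple_silicon is hypothetical, and the check assumes that a native interpreter reports "arm64" (an x86 Python running under Rosetta would report "x86_64" instead).

```python
import platform


def is_apple_silicon() -> bool:
    """Return True when Python runs natively on an Apple silicon Mac."""
    return platform.system() == "Darwin" and platform.machine() == "arm64"


if is_apple_silicon():
    print("Apple silicon detected.")
else:
    # MLX targets Apple silicon, so other platforms will likely not work.
    print(f"Found {platform.system()} / {platform.machine()} instead of macOS / arm64.")
```

If the check fails on an M-series Mac, the virtual environment was probably created with an x86 build of Python.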

Section 1: Loading the Meta-Llama-3 Model

In this section we load the Meta-Llama-3 model with the MLX framework, which is tailored for Apple silicon. We also import generate here, since the later sections use it:

from mlx_lm import load, generate

# Define your model to import

model_name = "mlx-community/Meta-Llama-3-8B-Instruct-4bit"

# Loading model

model, tokenizer = load(model_name)

In this code snippet, the load function fetches and prepares both the model and its tokenizer from the MLX Community repository hosted on Hugging Face. The specified model, mlx-community/Meta-Llama-3-8B-Instruct-4bit, is a 4-bit quantized version: it needs far less memory and typically runs faster than the full-precision model, at the cost of a small loss in accuracy.
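To make the memory saving concrete, here is a back-of-the-envelope estimate of the weight size at different precisions. It is a rough sketch, not a measurement: it assumes "8B" means about 8 billion parameters, counts the weights only, and ignores quantization scales, the KV cache, and activations, all of which add overhead. The function name is hypothetical.

```python
def approx_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


n_params = 8e9  # assumption: "8B" taken as roughly 8 billion parameters

for label, bits in [("16-bit", 16), ("4-bit", 4)]:
    print(f"{label}: ~{approx_weight_memory_gb(n_params, bits):.0f} GB")
```

By this estimate the 4-bit weights take roughly a quarter of the space of 16-bit weights, which is what lets the model fit on Macs with less unified memory.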

The MLX Community on Hugging Face hosts a variety of models converted for Apple silicon, including Gemma, OpenELM, and Mistral. To try one of them, copy its repository name from the hub and use it as model_name in your setup.

Section 2: Generating Basic Responses

Once the Meta-Llama-3 model is loaded, you can generate responses using the generate function. Here’s a simple example to get you started:

# Generate a response from the model

response = generate(model, tokenizer, prompt="hello")

# Output the response

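print(response)

Using the prompt “hello” with the Meta-Llama-3 model and the parameters above, you will likely get a strange story about Sarah in a 1950s U.S. town, exploring identity themes; the exact text can vary. The probable reason is that the bare prompt is not wrapped in the model's chat template, so the model treats it as text to continue rather than as a message to answer. Section 3 applies the chat template.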
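One way to get a conversational reply to a short prompt is to route it through the same chat-template calls that Section 3 uses. The helper below is a sketch under that assumption; build_messages and chat_once are hypothetical names, and generate_fn is expected to be mlx_lm's generate, passed in as an argument so the helper can be exercised without loading a model.

```python
def build_messages(user_text, system_text=None):
    """Wrap plain text as a list of chat messages."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return messages


def chat_once(model, tokenizer, generate_fn, user_text, system_text=None, max_tokens=256):
    """Format one user turn with the chat template, then generate a reply."""
    messages = build_messages(user_text, system_text)
    # Same two steps as in Section 3: apply the template, then decode to text.
    input_ids = tokenizer.apply_chat_template(messages, add_generation_prompt=True)
    prompt = tokenizer.decode(input_ids)
    return generate_fn(model, tokenizer, prompt=prompt, max_tokens=max_tokens)


# Usage, assuming model, tokenizer, and generate from the earlier sections:
# print(chat_once(model, tokenizer, generate, "hello"))
```

With the template applied, the model should respond to "hello" as a greeting instead of continuing it as a story.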

Section 3: Advanced Example — Solving Mathematical Problems

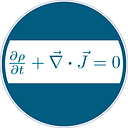

To showcase a more complex application of the Meta-Llama-3 model, let’s explore how it can solve a calculus problem. This example assigns the model a role through a system message and passes the user's question through the chat template. Below is the complete code for this task:

# Define the role of the chatbot

chatbot_role = "You are a math professor chatbot. You answer with clear step by step solutions."

# Define a mathematical problem

math_question = "Please compute the integral of f(x) = exp(-x^2) over the x domain of (- infinity, infinity), use LaTeX."

# Set up the chat scenario with roles

messages = [

    {"role": "system", "content": chatbot_role},

    {"role": "user", "content": math_question}

]

# Apply the chat template to format the input for the model

input_ids = tokenizer.apply_chat_template(messages, add_generation_prompt=True)

# Decode the tokenized input back to text format to be used as a prompt for the model

prompt = tokenizer.decode(input_ids)

# Generate a response using the model

response = generate(model, tokenizer, max_tokens=512, prompt=prompt)

Process walkthrough:

1. Scenario Configuration: The messages variable simulates a chat in which the system message gives the model a math professor’s role to guide its response style, while the user message presents the actual query (math_question).
2. Tokenization and Template Application: Using tokenizer.apply_chat_template, we format the roles and contents into a structure that the model can interpret; the result is returned as token IDs.
3. Prompt Preparation: The formatted input is then decoded back to text (prompt), setting the stage for model interaction.
4. Response Generation: The generate function processes the prompt to produce a detailed response, with max_tokens capping its length.
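To see what steps 2 and 3 produce, the sketch below rebuilds the prompt text by hand in the style of the Llama 3 chat format. It is an illustration only: the function llama3_style_prompt is hypothetical, and the template bundled with the tokenizer remains the authoritative source, so printing prompt from the code above is the reliable way to inspect the real string.

```python
def llama3_style_prompt(messages, add_generation_prompt=True):
    """Approximate the text layout of the Llama 3 chat format (illustration only)."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn is a role header, a blank line, the content, and an end-of-turn token.
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # An open assistant header cues the model to write the next turn.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


example = [
    {"role": "system", "content": "You are a math professor chatbot."},
    {"role": "user", "content": "What is 2 + 2?"},
]
print(llama3_style_prompt(example))
```

The trailing assistant header is what add_generation_prompt=True contributes: it tells the model that the next text it produces is the assistant's reply.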

Output:

from IPython.display import Markdown

# Render the model's response using Markdown cell formatting

Markdown(response)

Conclusion

This tutorial showed how to run the Meta-Llama-3 model on Apple silicon with the MLX framework, from a basic text generation call to a step-by-step solution of a calculus problem. Explore the MLX Community hub, experiment with different models and settings, and build on this setup for your own machine learning projects on Mac devices.